The Project Page | Github | Replicate API

The project's objective was to create consistently looking character concepts from two angles. This could be helpful for concept artists when they need to quickly re-imagine certain outfits in Ready Player Me style. Inspired by CharTurner embeddings, the initial idea was to just retrain Stable Diffusion (SD) model using Dream Booth.

Dataset

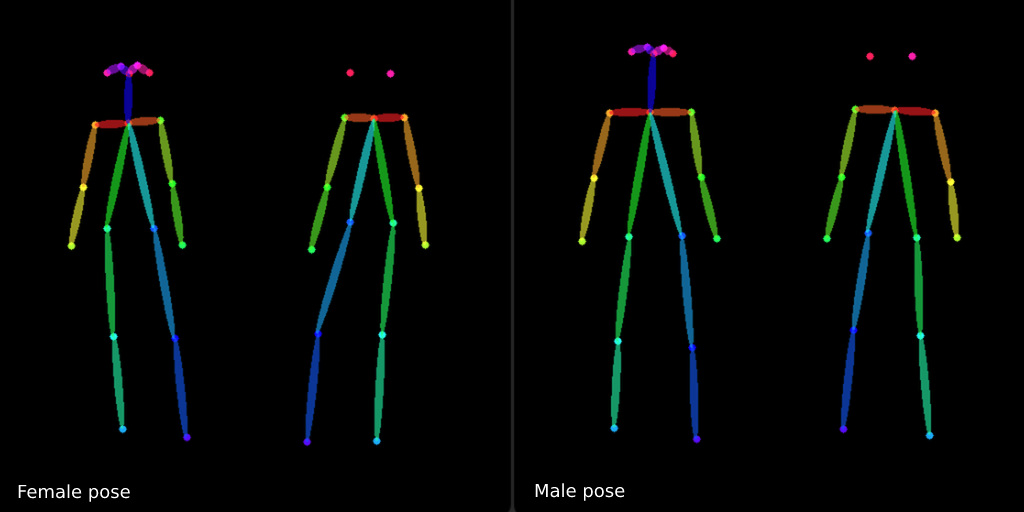

I created a small dataset of posed male and female characters that

consisted of approximately 85 images. My original dataset already had consistent poses per male and female characters as you can see in the examples below.

Model training & hacks

I utilized TheLastBen's colab notebook for the training script. Male and female character subsets used separate tokens during training. I didn't annotate each image with a proper description of the clothing, race, hairstyle. I just used the same tokens per all the females and all the male characters. I'm sure the model could have been trained better if I provided those detailed descriptions per image.

Nevertheless, the first model was able to generate consistently posed characters in 80% of cases without the use of ControlNet. Characters were also quite diverse and responded fine to the prompts. This model was based on SD2.1 and generated 768 resolution images. It did not learn the faces however and it spoiled the impression of the results.

I still think that 2.1, 768 resolution model gives the best quality generations in comparison to 1.5 models.

However, after ControlNet's announcement, I re-trained SD 1.5 model on a 512 resolution dataset to use it with available ControlNet. I also added female and male faces to the dataset.

Fixing faces

Despite adding faces to the dataset, I still could not achieve good quality faces on the generated full-body images. Thus I used a simple trick — I cut and upscaled the face region and pasted it back to the generated image with a blending mask. It worked because the model already knew how RPM faces look and was able to render them only on a close up region.

Increasing diversity and adjusting the style

To achieve nice looking style and more diversity of generated garments,

I merged my overfitted RPM model with another model OpenJourneyV4.

You can see how merging those models with different weights influenced the final style.

You may notice that despite using ControlNet with even increased weight,

I couldn't retain the proportions of the character. The general proportions have changed significantly as a result of the models merge.

Here you can see the same seed result for RPM, RPM*0.8 + 0.2*OpenJourneyV4, RPM*0.6+0.4*OpenJourneyV4, OpenJourneyV4.

For my future application I decided to continue with the style: RPM*0.8 + 0.2*OpenJourneyV4 (image 2).

Maintaining the pose

Stable Diffusion with DreamBooth can preserve the pose, but in 80% of cases. To make it reliable, I use ControlNet. A precomputed pose for each gender is always used for every generation.

Building with Diffusers 🤗

For the first experiments, I used local Automatic1111 API, a popular open-source project with lots of extensions. However, it could have been a huge pain to deploy it on Replicate. Thus, I re-implemented the logic using Diffusers. Together with a simple TypeScript interface, this API allowed users to select up to three styles (pre-filled prompts), avatar gender, and input a prompt.

How it works

The exact predictions code you can find on Github. Here I'm just sharing some step-by-step description of what I do.

- I use

StableDiffusionControlNetPipelinewith "lllyasviel/control_v11p_sd15_openpose" with custom pretrained Stable Diffusion 1.5. I sent the pose of selected gender into ControlNet condition and weighted prompt to get 512 image. - It's worth to mention how I emulated Automatic1111 experience with prompts.

There you can amplify the influence of certain words using brackets syntax like this:

(word). The community contributed a special pipeline to diffusers. I found that if the model was not perfectly trained, it might loose the ability to react to prompts well enough. This is exactly what happened with my custom model, thus I enhance the whole prompt by default. In the code below the mentioned pipeline used inget_weighted_text_embeddings. - I resize initial generation to 1024 and use it with

StableDiffusionImg2ImgPipelineto render the details of the full image. - After that I cut face area from image and run it through the same Img2Img pipeline with some additional prompt.

- I blend enhanced facial image back to the upscaled generated image.

Gallery

Finally, here's a showcase of some of the most impressive character generations created using this system. First, these are characters created by the originally trained SD model before the merge.

RPM style original

0.8 RPM style + OpenJourneyV4

And here are the results of the merge. The level of details, soft shadows and rendering in general is significantly better.

More

If you are interested to see more generations of this project, you may visit

- The Project Page — infinite gallery of generated characters;

- Github — Cog creation / predicitons code for Replicate;

- Replicate API — Publicly available API;

Thanks for reading:)